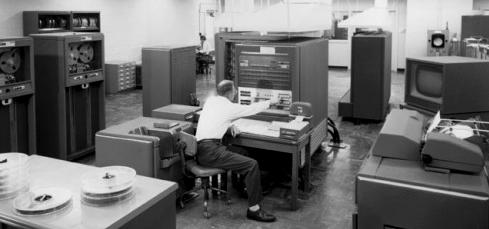

In my youth, and indeed straight through college, the idea that I might someday own a "personal computer" would have been unthinkable. When I arrived at MIT in 1959, the computer in the "computation center" was an IBM 704 (shown above). Most of the boxes in the picture are part of the computer.

The 704 was replaced the following year by an IBM 7094, a transistorized version. Both machines occupied an entire room at MIT, with a raised floor that hid the large cables running between the various units. Cold air from the air conditioners also ran under the floor and rose up through the units containing the electronics, to keep them from overheating.

Most people would have had no use for a computer anyway. What could you possibly do with one? Computers were used primarily for specialized scientific and engineering problems.

I certainly don't want to go into the whole history of personal computers here. It's been covered in great detail in many other places (Wikipedia has an entry on it, for example). Personal computers, once made possible by large-scale integrated circuits that put many transistors onto a single chip, didn't come into wide use until IBM introduced its personal computer in 1981, and even then they were considered business machines. They were not generally used at home, because there was still no real reason to have a personal computer in your home. Then the World Wide Web appeared ten years later, and it proved to be the application that the home personal computer had been waiting for.

Heathkit was a marvelous company that, until 1991, manufactured kits that allowed hobbyists to construct various sorts of electronic devices. My first major Heathkit projects were my first amateur radio transmitter, a DX-20, and later a second transmitter, a Heathkit Apache (these are discussed a bit in my blog entry Amateur Radio). My computer was built after the Heathkit line was purchased by Zenith Data Systems. The computer could be bought assembled from Zenith, but it was also offered as a kit by Heathkit, for hobbyists to assemble themselves.

People often talk these days about "building" their own computers. What they usually mean is assembling a computer from off-the-shelf board-level components: a motherboard (containing the CPU chip), memory boards, a disk drive, and various input-output boards. These are put together into a case containing a power supply.

Linguistically, the initialism "PC" has taken an interesting turn. After a shakeout of the early manufacturers, the two major types of personal computer available were made by Apple and by IBM. The IBM architecture was more open, and so attracted a number of manufacturers making "IBM compatibles". Initially, the machines by Apple and those by IBM and its imitators were all called "personal computers". But IBM, in a display of the arrogance that its position in the computer field allowed at the time, called its machine simply "the IBM PC". When the marketplace accepted this name, the term "PC" became synonymous with the IBM architecture. So people now ask, "Do you use a Mac, or a PC?" This implies, of course, that a Mac (meaning Macintosh, by Apple) is not a "PC", even though it is in fact a "personal computer".

Back in the days when software was simpler, we took the attitude that software changes were extremely easy to make, whereas changing your hardware was difficult. After all, to make a software change, nothing physical needed to be altered. It was all just symbols on paper. But precisely because it's so easy to change, software quickly became extremely complicated. Now the situation has reversed. We're running absolutely massive software "applications" that probably often contain code that's decades old, and we're running these applications on hardware that's typically less than three years old. It's now easy to change the hardware, and much harder to rewrite the software, a labor-intensive operation.

Technology has brought us all sorts of products that we now routinely pay for that didn't even exist when I was younger. I have monthly bills for cable television (well, Verizon FiOS, actually), Internet service, cell phone service, and a cell phone data plan. My wife and I use separate computers, and these are generally replaced every three or four years, at a cost which is hardly trivial. And the investment is not just financial.
Yesterday, I spent 2¼ hours upgrading my Dragon NaturallySpeaking dictation software (sold by the company Nuance) from version 11 to version 12. Much of this time was spent on the phone with Nuance tech support, where I was ably assisted by a rep named Mimi (after waiting a half hour in their telephone queue to be connected to her in the first place). I needed help because I ran into a problem during my installation and needed some guidance to recover from it (see Note 2).

I didn't even need to upgrade to version 12, as I was actually pretty happy with version 11 (I use this dictation software mostly to write my blog). But Nuance has improved Dragon NaturallySpeaking's recognition in every new version they've released, and in my limited experience dictating this particular blog entry, version 12 does seem to make fewer errors than version 11. Reducing the number of dictation errors is the major factor in determining my overall text input speed.

Everyone who uses a personal computer has had to learn a great deal about how their programs work. Microsoft's conceit seems to be that it knows what you want to do even better than you do. With their default settings, Microsoft applications constantly second-guess your every move. They change spellings, "fix" word spacing and punctuation, change your capitalization, and so on.

Apple's conceit is that its software is entirely intuitive, and that hence users barely need any instruction at all. This is simply nonsense. The first time I dealt with an Apple computer, I was unable to figure out how to delete something. I have a Ph.D. in Computer Science from MIT, but it was not the slightest bit obvious to me that I needed to drag the icon representing what I was deleting over to a garbage can. For that matter, it was not even particularly obvious what the garbage can icon represented.
The first time I picked up an iPod in an Apple Store, I was unable to scroll up and down through the menu on the screen. I tried every combination of operations I could think of, but nothing worked. Finally, I asked one of the helpful Apple representatives in the store. He told me I had to move my finger in a circle around the center control to move the selection highlight up and down. I really think I could have held that device in my hands for days without ever discovering by myself how to select from a menu.

Way back in early Apple history, I saw a woman looking at a computer in an Apple Store. It may have been an Apple Lisa or something like that, one of their early machines, but it did use a mouse. The woman had never seen a computer mouse before. An Apple representative told her to slide the mouse around in order to move the arrow on the computer's screen, and then he left her to her own devices. I watched as she tried to get the arrow on the screen to move, but the motions she was making with the mouse had absolutely no effect. At first I thought the mouse might not even be plugged in, but then I realized that the problem was that she was using it on a shiny desktop (the physical desktop, not the so-called "desktop" on the computer screen). The mouse used a rubber ball, as all computer mice did in those days, the optical mouse having yet to come into common use. The ball was sliding along the desktop without rotating. I grabbed a nearby newspaper and suggested that she place the mouse on top of it. (I don't remember if this was before anyone had thought of the idea of a "mouse pad", or if the store just hadn't provided one.) I then watched as she tried to move the arrow on the computer screen from the lower right corner to the upper left. To do that, she rotated the mouse 45 degrees to the left and pushed it diagonally across the newspaper, clearly in the direction she wanted the arrow to move.
Of course, on the screen, the arrow moved straight up (think about it). I once again intervened, telling her that she had to hold the mouse in a constant orientation as she moved it - she wasn't allowed to rotate it. This was not something the Apple salesman had thought it necessary to tell her, but it was obviously something that she needed to learn. No, it's not obvious.
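Why straight up? A ball mouse only senses motion along its own two axes (side to side and forward and back), so the cursor moves according to where a push points relative to the mouse body, not relative to the desk. The short Python sketch below works this out for the woman's move. It assumes a convention where y points up on both desk and screen, and it treats rotating the mouse "to the left" as a counterclockwise turn seen from above; the function name is just for illustration.

```python
import math


def desk_to_mouse_frame(dx: float, dy: float, mouse_rotation_deg: float) -> tuple[float, float]:
    """Convert a push measured on the desk into what the mouse itself reports.

    Assumes y points up and that a positive angle means the mouse body has
    been turned counterclockwise (to the left). The mouse only senses motion
    along its own axes, so we rotate the desk vector by the negative angle.
    """
    theta = math.radians(mouse_rotation_deg)
    mx = dx * math.cos(theta) + dy * math.sin(theta)
    my = -dx * math.sin(theta) + dy * math.cos(theta)
    return mx, my


# A push toward the upper left of the desk (a unit vector).
push = (-1 / math.sqrt(2), 1 / math.sqrt(2))

for label, angle in (("held straight", 0), ("rotated 45 deg left", 45)):
    mx, my = desk_to_mouse_frame(*push, angle)
    # round() trims floating-point noise; adding 0.0 turns -0.0 into 0.0
    print(f"{label}: cursor dx={round(mx, 3) + 0.0}, dy={round(my, 3) + 0.0}")
```

Held straight, the push reports equal leftward and upward components, so the arrow goes diagonally. Rotated 45 degrees, the whole push lands on the mouse's own forward axis, so the arrow goes straight up, exactly as it did for her.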

"After reading your recent article I recalled my own trouble with my new touch phone. I had bought one the night before attending the marriage party of a friend. When I was on my way to his house, I received a call from home. For the life of me I could not figure out how to receive it. All the previous phones I had had a dedicated physical button to receive a call. It was my first experience with a touch screen, and there were merely three physical buttons."

To the left is the bottom half of my Samsung Galaxy S III smart phone, showing an incoming call (the top part of the screen identifies the caller). To answer the call, you have to touch the circled green telephone handset icon and then "swipe" it to the right (that is, slide your finger to the right). This is indeed not obvious. I knew how to do it with my phone only because the store where I bought it helped me transfer my cell phone account to the new phone, made a test call, and showed me how to answer it.

In fact, I recently heard a story about a young person who also had trouble answering a phone. It was an old-fashioned phone like the one shown in the picture, and it was ringing in a school classroom. One of the students asked the teacher how to answer it; he was looking for a button to press. Hey, kids, you just pick up the handset - it's called going "off hook".

One reason I decided to build my first personal computer from a kit was so that I could understand it inside and out. As an engineer, I like to understand everything that I see. The kit provided electrical schematics of every board in the computer. The resident software needed to start the computer running, the so-called BIOS ("Basic Input-Output System"), was included on a read-only memory chip, and a listing of that software was also provided. Nothing at all was mysterious or secret. An electrical engineer like me could understand it completely, top to bottom.

With this start, I thought that I would be able to maintain this level of understanding as personal computers developed over time. Fat chance. First of all, things started to become trade secrets. IBM, unlike Zenith (the maker of my Heathkit computer), didn't provide a listing of its BIOS software. Secondly, the chips in the computer got more and more complicated. And the size and complexity of the software applications run on the computer skyrocketed, until it became extremely difficult to stay on top of it all.

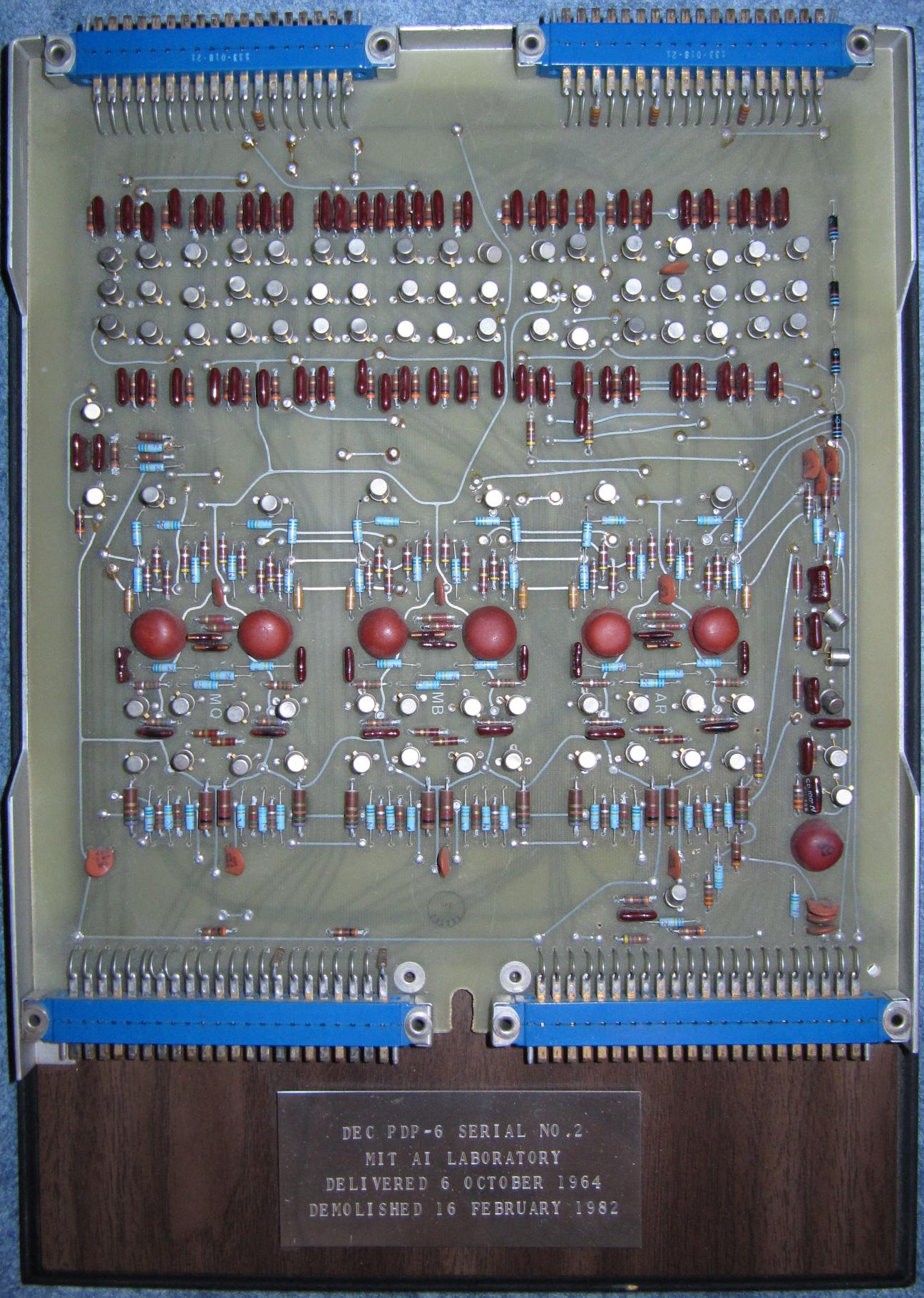

One day, while waiting for some software application to install on my machine, a co-worker from Kronos Tech Support took a look at a large printed circuit board I had hanging on my office wall, mounted on a plaque. The plaque held one printed circuit board (out of many) from a Digital Equipment Corporation PDP-6 mainframe computer built in 1964. It had been given to me by Prof. Gerald Sussman when the PDP-6 was demolished in 1982, because he and I had both worked with that computer when we were graduate students in Marvin Minsky's artificial intelligence laboratory. Since my co-worker seemed curious about it, I pointed out the three identical rectangular areas in the center of the board, each containing multiple discrete germanium transistors (the silver cans), pulse transformers (the brown circles), and other components. I told him that each of these rectangular areas on the board implemented a single flip-flop. And he said to me, "What's a flip-flop?"

Now, a flip-flop (technically called a "bistable multivibrator") could be considered the single most important component of any piece of digital circuitry. It holds one bit (one "binary digit") of any register in the computer (see my entry Bits). That co-worker was my go-to guy for any problems I had with my computer or with software running on it, but he didn't have the faintest idea how the computer actually worked. After all, you can drive a car without understanding how an engine operates. On the other hand, I was capable of designing a computer from scratch and building one from a pile of components, but I knew few of the magic incantations necessary to fix problems.

Nowadays, with smart phones, computers are even more "personal". We carry them around with us all the time.
And I suspect that every time I look at a picture gallery on my smart phone, and simply flip my finger to move from one picture to the next, the processor in that phone does more computations than the IBM 704 did during its entire life at MIT.
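Since the flip-flop was singled out above as the most important piece of digital circuitry, here is a minimal Python sketch of what "bistable" means in practice. It models a generic set-reset (SR) latch built from two cross-coupled NOR gates, a common textbook form, and not the transistor-and-pulse-transformer circuit on the PDP-6 board; the class and function names are hypothetical. Each gate's output feeds the other gate's input, so once the latch is set or reset, it holds that bit until told otherwise.

```python
def nor(a: int, b: int) -> int:
    """A NOR gate: outputs 1 only when both inputs are 0."""
    return int(not (a or b))


class SRLatch:
    """One bit of storage: two NOR gates whose outputs feed each other.

    s (set) forces the stored bit to 1, r (reset) forces it to 0, and
    s = r = 0 holds the current value. s = r = 1 is the "forbidden"
    input; in this model both outputs then settle at 0.
    """

    def __init__(self) -> None:
        self.q = 0      # the stored bit
        self.q_bar = 1  # its complement

    def update(self, s: int, r: int) -> int:
        # Re-evaluate the two gates until their outputs stop changing,
        # standing in for the feedback loop settling in real hardware.
        for _ in range(4):
            q = nor(r, self.q_bar)
            q_bar = nor(s, q)
            if (q, q_bar) == (self.q, self.q_bar):
                break
            self.q, self.q_bar = q, q_bar
        return self.q


def write_register(latches: list[SRLatch], bits: list[int]) -> None:
    """Store one bit per latch: set for a 1, reset for a 0, then release."""
    for latch, bit in zip(latches, bits):
        latch.update(bit, 1 - bit)
        latch.update(0, 0)  # inputs idle: the latch must remember on its own


def read_register(latches: list[SRLatch]) -> list[int]:
    return [latch.q for latch in latches]


register = [SRLatch() for _ in range(4)]  # a hypothetical 4-bit register
write_register(register, [1, 0, 1, 1])
print(read_register(register))
```

The `update(0, 0)` call in `write_register` is the interesting part: the bit survives after the inputs go idle. Holding a bit like that is the job each of the three rectangular areas on the PDP-6 board did.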

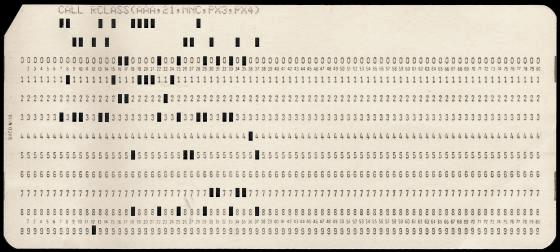

Note 1: Hollerith cards had 80 columns, each of which had 12 possible positions in which a rectangular hole could be punched. The combination of punched holes represented a specific character, which was often printed in readable form at the top of the column. Although these cards were pressed into service for use by computers, they were actually developed for earlier, much simpler "tabulating" equipment. Those devices were used to create, sort, process, and print the contents of Hollerith cards. Each individual punched card was considered to be a "unit record", containing one line of information. Many operations involved physically sorting the cards. Machines like the IBM 400 series "accounting machines" performed complex operations on the cards, and were in use throughout the 1960s. These machines were "programmed" not by writing software, but by plugging wire jumpers into removable control panels. To switch from one "program" to another, the user removed one plug panel and substituted a different one. Users got quite adept at programming these machines to do things that their IBM designers had not initially envisioned. In my youth, in my father's business office, I saw control panels that were not only dense with wires but also had electrical relays hanging from them. These relays were performing logic functions well beyond the sort of operations the machines had originally been designed for.

Note 2: I don't want to say too much about the details of my installation problems here, but I will mention that they involved a required upgrade of Microsoft's ".NET" framework. For reasons too complex to go into, this operation hung, failing to terminate after half an hour, despite displaying a moving green "progress bar". When I clicked on "Cancel", the text said something like, "Do you really want to cancel your installation after the completion of your '.NET' upgrade?" (my emphasis added). In other words, Nuance had provided the Cancel button to let me cancel the Dragon NaturallySpeaking upgrade, but provided no means to cancel the Microsoft .NET upgrade. And it was the Microsoft .NET upgrade that was hung. There was absolutely no way to stop it; I was unable to stop it using the Microsoft Task Manager either. In the end, I had to physically turn off the computer. When it came back on, the .NET upgrade turned out to be still "in process", in a sense, but at a later point in the installation, the Nuance installer finally DID provide a way of terminating it. Aren't computers wonderful? This is the sort of "Catch-22" they put you in rather frequently. As some anonymous wag once put it, "To err is human, but to really screw things up requires a computer."
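Note 1's description of how the punches in a column encode a character can be made concrete. The Python sketch below uses the IBM card code for letters and digits as it is commonly documented: a digit is a single punch in its own row, and a letter combines a "zone" punch in row 12, 11, or 0 with a digit punch. Special characters, which used additional punch combinations, are deliberately left out, and the function names are illustrative only.

```python
# Rows of an 80-column card, top to bottom.
ROWS = (12, 11, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9)
CARD_COLUMNS = 80

# Letter/digit table (a simplified subset of the IBM card code).
PUNCHES: dict[str, tuple[int, ...]] = {" ": ()}  # blank column: no holes
for d in range(10):
    PUNCHES[str(d)] = (d,)
for i, letter in enumerate("ABCDEFGHI", start=1):
    PUNCHES[letter] = (12, i)
for i, letter in enumerate("JKLMNOPQR", start=1):
    PUNCHES[letter] = (11, i)
for i, letter in enumerate("STUVWXYZ", start=2):
    PUNCHES[letter] = (0, i)


def encode(text: str) -> list[tuple[int, ...]]:
    """Return the punched rows for every column of a card holding text."""
    text = text.upper()
    if len(text) > CARD_COLUMNS:
        raise ValueError("a card holds at most 80 characters")
    columns = []
    for ch in text:
        if ch not in PUNCHES:
            raise ValueError(f"{ch!r} is not covered by this sketch")
        columns.append(PUNCHES[ch])
    # The rest of the card's columns are left unpunched.
    columns += [()] * (CARD_COLUMNS - len(columns))
    return columns


def render(text: str) -> str:
    """Draw the used columns, one line per row ('#' marks a hole)."""
    columns = encode(text)[: len(text)]
    return "\n".join(
        f"{row:>2} " + "".join("#" if row in col else "." for col in columns)
        for row in ROWS
    )


print(render("IBM 704"))
```

Reading down a column of the output shows which of the 12 positions are punched; "I", for instance, has holes in rows 12 and 9.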


The green telephone handset icon on the smart phone is interesting, because very few telephone handsets these days look anything like that. The icon is more like the shape of the handset on old-fashioned telephones like the dial-telephone seen to the right. There are probably young people around who have never seen one of these.
