#0045


Bits

Just what is "one bit of computer memory"?

These days, the capacity of a computer memory is generally measured in "bytes", and one "byte" is eight "bits". Note 1 Of course, one byte isn't worth much, so the word "byte" is modified by the usual metric prefixes. A "megabyte" is roughly one million (1,000,000) bytes, a "gigabyte" is about one billion (1,000,000,000) bytes, and so on. Note 2 But each of these bytes comprises eight bits.

But what is "one bit" of memory? The word "bit" in this context is not the same word we use casually in everyday speech, as in, "There's a bit of pie left in the fridge." A "bit" of computer memory is precisely defined as the amount of memory needed to distinguish between two alternatives (the word originally stood for "binary digit"). You could use one bit of memory to remember, for instance, whether or not it rained in Seattle on a particular date in April. One bit is enough because the answer is either YES or NO. Note 3

What's a common memory device that can store exactly one bit? Well, look below: an ordinary light switch. It has exactly two states, OFF and ON. There is a "set" operation that turns it from OFF to ON, and a "reset" operation that turns it from ON to OFF. And in between, it will retain ("in memory") whatever state it was last left in.

Light switch: OFF state, SET operation, ON state, RESET operation

And that's it. I'll bet you didn't know your house was full of one-bit, non-volatile memories (non-volatile means a memory retains its state even in the absence of power). I might mention that in the computer field, the two states are generally labeled "0" (zero) and "1" (one) instead of OFF and ON.

Of course, memories in actual computers are not the same as light switches. To explain how they are built, I need to get into what I call the "Double Bed Dual-Control Electric Blanket Effect".

Imagine you are sharing a double bed with someone (you may imagine anyone you'd like), and each of you has a controller which sets the temperature of your own side of the electric blanket. However, unbeknownst to you, your maid (you may imagine you have a maid) turned the blanket over (side to side) while making the bed, so that in fact your control adjusts your partner's side of the blanket, and vice-versa. What will happen in this situation?

You might both sleep peacefully for a while if both controls are set at a comfortable temperature. The system is in a symmetrical state, and is in equilibrium. But it's an unstable equilibrium. The slightest perturbation will cause trouble.

Suppose you feel a little hot. You respond by turning your control down a bit, which makes your partner feel cooler. When your partner responds by turning the opposite-side control up, you get even hotter, so you turn your control down even more. Eventually, your control is full off, yet you are roasting, and your partner's control is full on, but your partner is freezing (I recount this story based on actual experience, except for the maid). Here things stay until one of you says "What the hell's going on here?", and you figure it out and turn the blanket back over. Of course, had you originally felt a bit cool instead of hot, the same thing would have happened in reverse, ending up with you cold and your partner hot. The original situation is symmetrical, and so may end up in either of two stable states.

There is a common circuit, a fundamental part of all computers, that uses the "Double Bed Dual-Control Electric Blanket Effect" to hold one bit of memory. The circuit is formally called a "Bi-stable Multivibrator", but computer engineers refer to it as a "Flip-Flop" (no, it's not a bedroom slipper).

In a "Flip-Flop", instead of two people under a blanket, there are two transistor "inverters", which are amplifiers in which a low-voltage input produces a high-voltage output, and vice-versa. Let's call these two inverters "A" and "B". They are cross-wired so that the output of A is connected to the input of B, and the output of B is connected to the input of A. As a result, the circuit has two stable states:

A output low and B output high

or

A output high and B output low.

In effect, the voltage level out of each inverter is equivalent to the temperature on each side of the blanket in my earlier example.

One of these states is called "0" and the other "1", and the Flip-Flop thus stores one "bit". Binary numbers are made out of multiple bits held in multiple Flip-Flops, and there you have the basis of all of computer technology. Well, there's a bit (!!) more to it than that, but it's a start.
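The blanket effect is easy to reproduce numerically. The Python sketch below is a toy model, not a real circuit simulation: it assumes each inverter has a smooth S-shaped transfer curve (the hypothetical `inverter` function, with made-up `VDD` and `GAIN` values) and lets each output drift toward whatever its inverter's input demands, which is the other inverter's output. Started exactly at the balanced midpoint, the pair stays put; nudged even slightly, it falls into one of the two stable states.

```python
import math

VDD = 1.0    # assumed supply voltage (arbitrary units)
GAIN = 10.0  # assumed steepness of each inverter's transfer curve


def inverter(v_in: float) -> float:
    """Idealized inverter: a low input gives a high output, and vice versa."""
    return VDD / (1.0 + math.exp(GAIN * (v_in - VDD / 2)))


def settle(v_a: float, v_b: float, steps: int = 2000, dt: float = 0.01):
    """Let two cross-coupled inverters relax toward equilibrium.

    Each output drifts toward the inverse of the *other* output,
    like each sleeper adjusting the wrong side of the blanket.
    """
    for _ in range(steps):
        target_a = inverter(v_b)  # B's output drives A's input
        target_b = inverter(v_a)  # A's output drives B's input
        v_a += (target_a - v_a) * dt
        v_b += (target_b - v_b) * dt
    return v_a, v_b


print(settle(0.5, 0.5))    # perfectly balanced: stays at (0.5, 0.5)
print(settle(0.501, 0.5))  # A nudged up: A ends high, B ends low
print(settle(0.499, 0.5))  # A nudged down: A ends low, B ends high
```

Which state the pair lands in depends only on the direction of the nudge, just as the blanket ends up with you roasting or freezing depending on whether you first felt a bit hot or a bit cool.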

Flip-flops are used to form the "registers" in the Central Processing Unit ("CPU") of a computer, which hold binary numbers for computation. These days, any flip-flop that's part of a microprocessor on a silicon chip is minuscule, built from transistors with features less than a hundred nanometers wide, and there may be millions of them on the chip. But below is a photo of a single flip-flop circuit on the PDP-6 mainframe computer we used in the sixties in MIT's Artificial Intelligence lab. The "MB" designation (faintly visible in the center in soldered printed circuit etch) means it was part of the "Memory Buffer" register, used to briefly hold numbers being transferred between the CPU and the main memory. It took 36 such flip-flops, each on a separate printed circuit board (along with other flip-flops and other components), to make up the entire register.

One single flip-flop in our PDP-6

I've put a ruler next to the circuit so you can see how large an area it uses on the printed circuit board - it's over 10 cm (4 inches) wide by 5.5 cm (2.2 inches) high (on my computer monitor, the picture is very close to actual size). The silvery circles are discrete transistors seen from above, and the big reddish circles are pulse transformers. Notice that although I described a basic flip-flop as requiring only two transistors (and you can indeed build one that way), an actual flip-flop used in a computer generally contains more than two, and other components as well. To give you an idea of where this board fit into the sweep of computer technology: the PDP-6 mainframe computer that contained this board was delivered in October 1964.

Flip-flops were much too expensive to use by the hundreds of thousands to form a computer's "memory". Other technologies were used for that. Chief among them in the sixties were "core memories", in which each bit of memory was held by a tiny donut of ferrite material that could be magnetized in one direction or the other. The magnetization was set by passing an electrical current along a wire that ran through the hole in the donut, which was called a "core". The picture below is an example of an early core memory module. Each two-sided plane in the "stack" is 12.7 cm (5 inches) square, not counting the external interconnections, and the 28-plane stack is 17.8 cm (7 inches) high.

Core memory stack, equivalent to 28,672 bytes

Contains 229,376 ferrite cores, organized as 4096 56-bit "words"

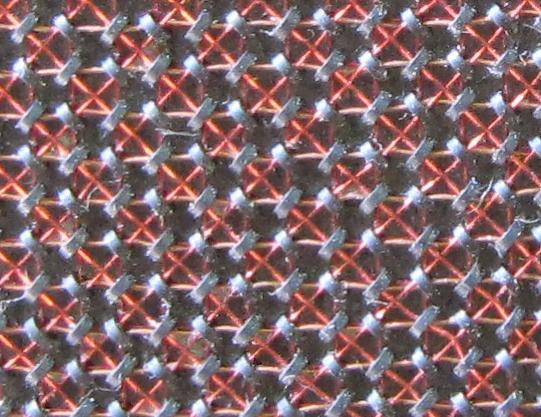

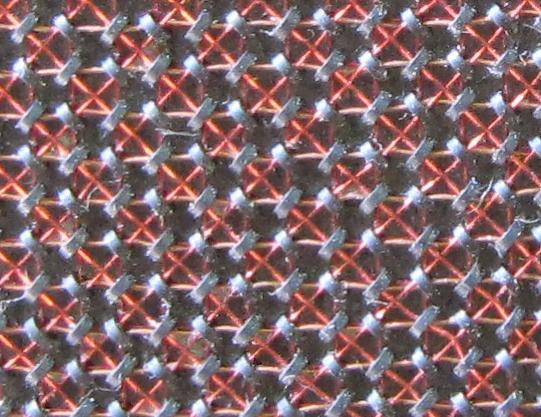

A close-up view of one of the core planes is shown below. Three wires are threaded through each core (when this memory was made, this was usually done by hand). The wires going from left to right (clearly visible) and from top to bottom (in the back, harder to see) were used to select a single core on the plane for reading and writing. The diagonal wire runs through every core on the plane, and is called the "sense" wire. This was called a "3-D array".

The cores in the above array are about 0.25 cm (0.1 inches) outside diameter

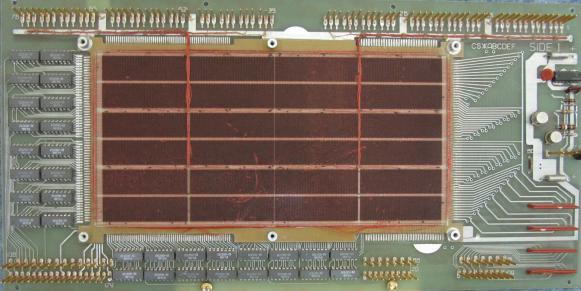

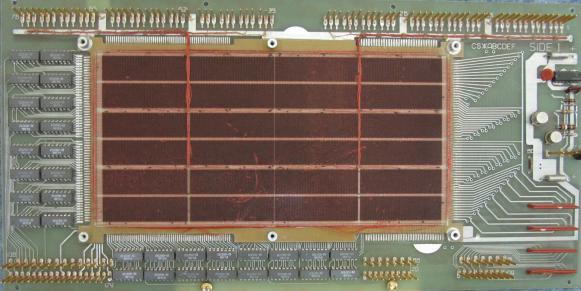
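The selection trick can be sketched in a few lines too. What follows is a simplified model, not a description of any particular machine: it assumes the usual coincident-current scheme, in which the row wire and the column wire each carry only half the current needed to flip a core, so only the core where the two energized wires cross receives enough to switch. Reading is destructive: the selected core is driven toward 0, the shared sense wire picks up a pulse only if that core flipped, and a 1 is then written back. The `CorePlane` class and its methods are hypothetical names for illustration.

```python
THRESHOLD = 1.0          # current needed to flip a core (arbitrary units)
HALF = THRESHOLD / 2     # assumed: each selection wire carries half of it


class CorePlane:
    """Toy coincident-current core plane; +1 and -1 are the two magnetizations."""

    def __init__(self, rows: int, cols: int) -> None:
        # every core starts magnetized in the "0" direction
        self.cores = [[-1] * cols for _ in range(rows)]

    def _drive(self, x: int, y: int, direction: int) -> int:
        """Pulse row wire x and column wire y toward `direction`.

        Returns how many cores flipped, standing in for the pulse
        picked up by the sense wire that threads every core.
        """
        flips = 0
        for r, row in enumerate(self.cores):
            for c, state in enumerate(row):
                current = HALF * ((r == x) + (c == y))  # none, half, or full
                if current >= THRESHOLD and state != direction:
                    row[c] = direction
                    flips += 1
        return flips

    def write(self, x: int, y: int, bit: int) -> None:
        # Simplification: real planes sequenced their currents differently,
        # typically adding an "inhibit" current to keep zeros from flipping.
        self._drive(x, y, 1 if bit else -1)

    def read(self, x: int, y: int) -> int:
        was_one = self._drive(x, y, -1) > 0  # destructive: the core is now 0
        if was_one:
            self.write(x, y, 1)  # restore the bit the read just erased
        return int(was_one)


plane = CorePlane(64, 64)
plane.write(10, 20, 1)
print(plane.read(10, 20), plane.read(10, 21))  # 1 0
```

Cores that share only a row or only a column with the selected one get half the current and are left alone, which is why one wire per row and one per column is enough to pick out any single core.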

As the art was further developed, these memories got extremely dense. A later example is shown below. The entire memory unit is on a single large printed circuit board, 39 cm (15.375 inches) wide by 18.25 cm (7.2 inches) high, but the section containing the cores is only 23.3 cm (9.2 inches) wide by 11.5 cm (4.5 inches) high. Yet this memory module holds almost as much as the much larger stack shown above (about 86 percent of its capacity). 12-bit "words" were used in Digital Equipment's PDP-5 and PDP-8 computers.

Core memory board, equivalent to 24,576 bytes

Contains 196,608 ferrite cores, organized as 16,384 12-bit "words"

Digital Equipment Corporation part number H-219B, ca. 1977

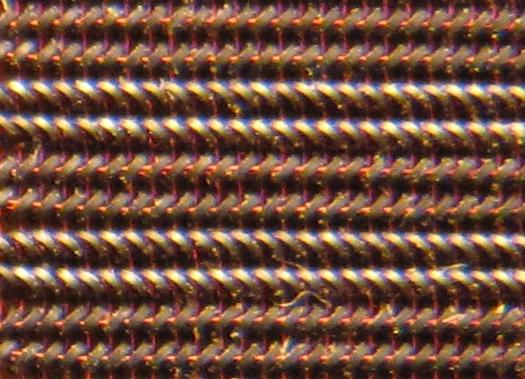

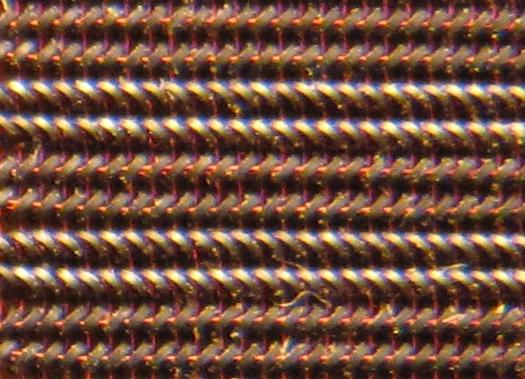

The greater density was achieved by using smaller cores and packing them in really tightly, as shown below (only a small portion of the array is shown). Smaller cores could be used in part because a method was developed that required only two wires to pass through each core (eliminating the need for a separate sense wire). This was called a "2½-D" array. It also allowed the core planes to be assembled by machine.

The cores are 0.0457 cm (0.018 inches) in outside diameter, about a fifth the diameter of the cores shown two pictures up. They are also clearly much more densely packed.

The largest core memory in my own experience was a memory made by the company Fabri-Tek that was installed on our PDP-10 in the Artificial Intelligence Laboratory at MIT. It held a quarter of a million "words" of memory (262,144 words, to be exact), where each "word" in a PDP-10 comprised 36 bits. In terms of the "byte-oriented" memories of today, this is equivalent to a bit under 1.2 million bytes (substantially less than the memory card in your digital camera). It was contained in two cabinets, each about the size of a kitchen refrigerator.

It was actually built to store 40-bit "words": the designers were afraid that some of the bit planes might not work, so they added four spare planes. Thus, it contained 10,485,760 individual ferrite cores. My blog entry Moby memory contains a few amusing stories about its installation and testing. In addition, the hackers in the AI lab put the extra four bits per word (all the planes worked) to good use, in a manner that might have influenced the future development of computer science (also discussed in that entry).
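All of the capacity figures in this post follow from one rule: a core memory needs one ferrite core per bit, so the number of cores is the number of words times the bits per word, and dividing the bits by eight gives today's "equivalent bytes". A quick Python check (the function names are just for illustration):

```python
def cores_needed(words: int, bits_per_word: int) -> int:
    """One ferrite core stores one bit, so a memory needs words x bits cores."""
    return words * bits_per_word


def equivalent_bytes(words: int, bits_per_word: int) -> int:
    """Capacity expressed in today's 8-bit bytes."""
    return words * bits_per_word // 8


stack = cores_needed(4096, 56)      # early 28-plane stack, 56-bit words
board = cores_needed(16_384, 12)    # H-219B board, 12-bit words

print(stack, equivalent_bytes(4096, 56))      # 229376 28672
print(board, equivalent_bytes(16_384, 12))    # 196608 24576
print(f"board/stack = {board / stack:.1%}")   # board/stack = 85.7%

# Fabri-Tek memory: 40 bits per word were built (36 data + 4 spares),
# but only the 36-bit PDP-10 word counts toward usable capacity.
print(cores_needed(262_144, 40), equivalent_bytes(262_144, 36))  # 10485760 1179648
```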

Nowadays, the bits in many of our computer memories are made of transistor circuits again. Of course, to make a large memory, each memory cell (representing one bit) is made to use as few transistors as possible. That, plus the very large scale integration that is now possible, allows us to put transistors by the billions onto a single silicon "chip". An eight gigabyte memory holds 68,719,476,736 transistorized memory cells. And each of these is a distinct physical cell fabricated on the chip (in "static" memory, a small flip-flop; in the "dynamic" memory used for most main memories, a single transistor paired with a tiny capacitor), just as each bit stored in a core memory was stored by magnetizing a specific individual ferrite core.

But your light switches store only one bit each. Note 4

#0045

*TECHNOLOGY


© 2010 Lawrence J. Krakauer


Originally posted August 26, 2010, updated June 9, 2011 to reference Moby memory


Footnotes

Note 1:

Since it's a bunch of "bits" considered together, it's called a "bite". But since it would have been too easy to confuse that word with the word "bit" itself, it was spelled with a "y" instead of an "i", making it a "byte".

A "byte" was not always eight bits. In our Digital Equipment PDP-10 computer, a "byte" was defined as any number of adjacent bits taken out of any desired position within a 36-bit "word". But when IBM ("International Business Machines") introduced its System/360 computer line, it fixed the size of a "byte" at eight bits. And due to IBM's power in the marketplace at the time, that definition stuck.
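A PDP-10 "byte" was described by its size and its position within the word, and the machine had instructions such as LDB (load byte) and DPB (deposit byte) to pull such a field out of a word or put one in. The Python sketch below mimics that idea with bit masks. It assumes the position is counted as the number of bits to the right of the byte, and the functions are simplified stand-ins, not the real instructions (which worked through "byte pointers" stored in memory). As an example, it packs five 7-bit ASCII characters into one 36-bit word, leaving the lowest bit unused, the way PDP-10 text was commonly stored.

```python
WORD_BITS = 36
WORD_MASK = (1 << WORD_BITS) - 1


def ldb(word: int, position: int, size: int) -> int:
    """Load a byte: the `size` bits that have `position` bits to their right."""
    return (word >> position) & ((1 << size) - 1)


def dpb(byte: int, word: int, position: int, size: int) -> int:
    """Deposit a byte: replace that field of `word` with the low bits of `byte`."""
    mask = ((1 << size) - 1) << position
    return ((word & ~mask) | ((byte << position) & mask)) & WORD_MASK


def pack_ascii(text: str) -> int:
    """Pack up to five 7-bit characters, left-justified, into one 36-bit word."""
    word = 0
    for i, ch in enumerate(text[:5]):
        word = dpb(ord(ch), word, position=29 - 7 * i, size=7)
    return word


def unpack_ascii(word: int) -> str:
    """Recover the five 7-bit characters from a packed word."""
    return "".join(chr(ldb(word, 29 - 7 * i, 7)) for i in range(5))


word = pack_ascii("HELLO")
print(oct(word), unpack_ascii(word))
```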

Note 2:

Actually, since memories are "addressed" with binary (base 2) numbers, their sizes come in powers of two. $2^{10}$ ("two to the tenth power"), which equals 1,024, happens to be close to 1,000. So a "kilobyte" is actually defined as containing 1,024 bytes, not 1,000. Similarly, a "megabyte" is $1024^2 = 1{,}048{,}576$ bytes, and a "gigabyte" is $1024^3 = 1{,}073{,}741{,}824$ bytes. By the way, some people pronounce the first "g" of "giga" as a "soft g" (as in gene), and some (like me) pronounce it hard (as in "good").
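The powers of two in this note, and the 68,719,476,736 memory cells in an eight-gigabyte memory mentioned in the main text, are easy to verify. A short Python check, assuming the binary (1,024-based) definitions given here:

```python
KILOBYTE = 2 ** 10          # 1,024 bytes
MEGABYTE = KILOBYTE ** 2    # 1,048,576 bytes
GIGABYTE = KILOBYTE ** 3    # 1,073,741,824 bytes
BITS_PER_BYTE = 8

# An n-bit binary address can select 2**n locations, so a 30-bit
# address reaches every byte of exactly one gigabyte.
print(2 ** 30 == GIGABYTE)                        # True
print(f"{8 * GIGABYTE * BITS_PER_BYTE:,} bits")   # 68,719,476,736 bits
```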


Note 3:

The word "bit" has a different definition when it's used as a unit of "information". In "Information Theory", one "bit" is the amount of information needed to distinguish not just between two alternatives, but between two equally likely alternatives. Consider: how much information have you gotten if you are told that it did not rain in Seattle on a particular date last April? If you figure that there may be a 50% chance of rain in Seattle in April, then "rain" and "no rain" are equally likely, and you've been given one bit of information, in the "Information Theoretic" sense of the word.

But how much information have you been given if you're told that it did not rain in the Sahara desert on that particular date? Information Theory captures, in a quantitative, mathematical sense, the idea that in that case, you have been given a lot less than one bit of information, since it almost never rains in the Sahara ("So tell me something I didn't already know!"). And that's all I'll say about the Information Theoretic sense of "one bit" for now.
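The quantity Information Theory uses here is easy to compute: learning that an event of probability $p$ occurred gives you $-\log_2 p$ bits of information. The Python sketch below applies that formula; the 0.999 chance of a dry day in the Sahara is a made-up figure for illustration, not a weather statistic.

```python
import math


def information_bits(p: float) -> float:
    """Bits of information gained on learning that an event of probability p happened."""
    return -math.log2(p)


print(information_bits(0.5))    # 1.0: "no rain in Seattle" at 50/50 odds
print(information_bits(0.999))  # about 0.0014: "no rain in the Sahara" (assumed odds)
```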

"Information Theory" was originally developed by Claude Shannon, who later became a professor at MIT. He had previously (in his Master's thesis!) proposed the use of Boolean algebra to design electrical switching circuits. And as a student at MIT, I had the great fortune to have him as a professor in one of my courses.

Note 4:

Light switches in the US are generally ON when UP, and OFF when DOWN. But I've noticed that the convention in France is the opposite - you usually push their switches DOWN to turn on a light.

