The Artificial Intelligence Lab at MIT had a PDP-6 mainframe computer, and later installed a PDP-10 computer, which ran the same instruction set. These machines had memories which were not organized into "bytes" like the machines of today. Rather, they had "words" of 36 bits, equivalent in length to 4½ "bytes". The memory technology of the time was core memory, as I described and showed in my blog entry "Bits". If I'm remembering correctly, when our PDP-6 was first installed, it had a memory of only 16K words. This was later increased to 64K. These memories are pathetically tiny by modern standards, and it's hard to believe that such large machines could actually accomplish anything with so little memory. Eventually, these memory sizes began to severely limit the research being done by the lab. The instruction set of the PDP-6 and PDP-10 enabled the machines to address up to "256K" words (to be precise, 262,144 words). Note 2 The lab thus purchased a memory of that capacity, built by the company Fabri-Tek, which is what I'll be discussing in this entry. The cost at the time was a staggering $380,000.

After initially posting this page, I asked Richard Greenblatt what he remembered about the unit. He sent me a long message full of fascinating technical details, much too technical to be included here. He also reminded me that the unit occupied not just one, but two cabinets. He wrote, "The bay with the power supply and interface and that panel [shown at the top of this entry] was 19 inches [about 48 cm.] wide. The other bay, with the core stacks, was considerably wider, 40 inches [101.6 cm.], I'm pretty sure." I've incorporated Greenblatt's comments into this entry.

Each "bit" in such a memory is implemented by an individual "core", which is a tiny toroid ("doughnut") made of a ferrite material with carefully chosen magnetic properties. A "zero" or a "one" is stored in the core by magnetizing it in one direction or the other.
This is done by passing an electrical current along a wire which goes through the core. Actually, two wires go through the core, called the "X" and the "Y" wires. The ferrite material is fabricated so as to have a very sharp threshold for magnetization. Thus a precisely controlled current through just one of the wires, about half of what is needed to switch the core, will not change its magnetization. Current must pass through both the "X" and the "Y" wires for the core to switch the direction of its magnetization. This is one of the mechanisms for selecting the desired core. A "plane" of 512 x 512 cores (262,144 cores, one for each word) was needed for each bit position of the computer "word". Since the computer had a 36-bit word, 36 such core planes were required. Note 3

The 36 core planes needed to be connected together in a "stack". An example of an older and much smaller memory was shown in my "Bits" blog entry. However, the core stack in the Moby Memory was much larger and denser, using considerably smaller cores, than the memory stack shown in that entry. Once a core plane has been assembled into a stack, it can no longer be repaired. Thus, although the core planes could be tested before being assembled together, any subsequent failure would ruin the entire memory. Perhaps because they feared this possibility, Fabri-Tek constructed the memory with 40 core planes rather than 36. This can actually be seen on the control panel, which has four extra lights in the bottom row of the data read-out. There may have been other reasons why Fabri-Tek included the extra bits. Greenblatt wrote, "They probably had some hope to make this design into a product for the [IBM System] 360 as well as DEC computers, but, as far as I know, they never did." In any event, the extra bits included by Fabri-Tek may have had implications for future developments in computer science, as we'll see below.
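To make the selection mechanism concrete, here is a toy Python model of a single core plane. It assumes the simple X/Y coincident-current scheme described above, not the 2½-D arrangement the Fabri-Tek memory actually used (Note 3), and the current values are normalized, illustrative assumptions: each line carries half the switching current, and the threshold sits somewhere between half and full current. The names `split_address` and `pulse` are hypothetical.

```python
"""Toy model of coincident-current selection in a single core plane (illustrative only)."""

PLANE_SIZE = 512          # 512 x 512 cores per plane: one core per word, one plane per bit
HALF_CURRENT = 0.5        # assumption: each drive line carries half the switching current
SWITCH_THRESHOLD = 0.75   # assumption: a sharp threshold between half and full current


def split_address(address: int, size: int = PLANE_SIZE) -> tuple[int, int]:
    """Split a word address into (x, y) line numbers: 18 address bits -> two 9-bit halves."""
    if not 0 <= address < size * size:
        raise ValueError("address out of range")
    return divmod(address, size)


def pulse(plane: list[list[int]], x: int, y: int, value: int) -> None:
    """Drive X line `x` and Y line `y` with half current each, in the direction that stores `value`.

    Only the core threaded by both lines sees the full current and switches; the other
    cores on line x or line y are merely "half-selected" and keep their state.
    """
    for row, cores in enumerate(plane):
        for col in range(len(cores)):
            current = (HALF_CURRENT if row == x else 0.0) + (HALF_CURRENT if col == y else 0.0)
            if current >= SWITCH_THRESHOLD:
                cores[col] = value


if __name__ == "__main__":
    plane = [[0] * 8 for _ in range(8)]  # a tiny 8 x 8 plane instead of 512 x 512
    pulse(plane, 3, 5, 1)
    print(sum(map(sum, plane)))          # 1: only the core at (3, 5) switched
    print(split_address(262_143))        # (511, 511): the last word of the Moby Memory
```

The sharp threshold is what makes this work: half-selected cores on the same lines see only half the current and stay put.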
Because this memory was extremely large by the standards of the time, the denizens of the AI group called it the "Moby Memory". "Moby" was a word used in the lab to indicate anything large. This usage can be found in the Jargon File, which describes the slang used in the Artificial Intelligence group. "Moby" is apparently derived from "Moby Dick", the whale.

I was in the lab when Fabri-Tek engineers arrived one day to install the memory. They set it up and, using the panel shown above, ran it through self-testing. Automatic testing hardware built into the unit allowed the memory to be filled with zeros, with ones, or with a checkerboard of alternating zeros and ones. It also allowed the memory to be filled with what was thought to be a "worst-case" pattern, that is, a pattern of ones and zeros that would stress the memory by producing the worst possible cross talk between the bits. The memory passed all these tests, as it had passed them in the Fabri-Tek plant before being shipped out. The memory was then hooked up to our PDP-10 computer via its interface logic. Programs were written on the PDP-10 to test the memory by writing simple patterns into it and then reading them back. Again, the memory passed the tests. However, the patterns being written into the memory were very uniform and simple. The programmers wondered what would happen in the real-life situation, in which the contents of the memory would be rather random.

I believe it was Roland Silver, a mathematician, who thought of a better way to test the memory. This could be done with what's called a "pseudo-noise" sequence of numbers, often called a "PN-sequence". This is a way of generating a sequence of numbers that look quite random, but which are in fact generated in a systematic way. Roland wanted to help us fill the 262,144 words of the memory with different numbers. This is easy to do by just filling the memory with numbers in sequence, but we wanted the numbers to be in a random-looking order.
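The simple pattern tests described above amount to a write-then-read-back loop. The Python sketch below illustrates the idea on 36-bit words; it is a hypothetical illustration, not the lab's actual PDP-10 test program. The "memory" is a Python list, and `StuckBitMemory` is a made-up faulty memory with one bit of one word stuck at zero, included only to show a pattern test catching (or missing) a fault.

```python
WORD_BITS = 36
WORD_MASK = (1 << WORD_BITS) - 1      # all 36 bits set: 0o777777777777
ALTERNATING = 0o525252525252          # 36 bits of alternating ones and zeros: 1010...10


def all_zeros(addr: int) -> int:
    return 0


def all_ones(addr: int) -> int:
    return WORD_MASK


def checkerboard(addr: int) -> int:
    """Alternate bits within each word, and invert the pattern from one word to the next."""
    return ALTERNATING if addr % 2 == 0 else ALTERNATING ^ WORD_MASK


def run_pattern_test(memory: list, pattern) -> list[int]:
    """Write pattern(addr) into every word, read everything back, return failing addresses."""
    for addr in range(len(memory)):
        memory[addr] = pattern(addr)
    return [addr for addr in range(len(memory)) if memory[addr] != pattern(addr)]


class StuckBitMemory(list):
    """Hypothetical faulty memory: one bit of one word can never store a 1."""

    def __init__(self, size: int, bad_addr: int, bad_bit: int):
        super().__init__([0] * size)
        self.bad_addr, self.bad_bit = bad_addr, bad_bit

    def __setitem__(self, addr, value):
        if addr == self.bad_addr:
            value &= ~(1 << self.bad_bit)   # the stuck bit silently drops to 0
        super().__setitem__(addr, value)
```

With `StuckBitMemory(16, bad_addr=5, bad_bit=3)`, the all-zeros pattern reports no failures while the all-ones pattern reports address 5, which is why several patterns were run. None of these patterns resembles the rather random contents of a memory in real use, and that is the gap Roland's idea addressed.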
Roland was aware that this could be done using a methodology known in data communications as a "Cyclic Redundancy Check" ("CRC"). This is a mathematical method that arises out of a somewhat esoteric branch of algebra, "prime polynomials over Galois field 2", the last part being abbreviated "GF(2)". The only trouble was that to generate a sequence as long as 262,144 words, we needed to know a prime polynomial in $X^{18}$ (X to the 18th power). Nowadays you can find these on the web, but in the late 60s they were not so easy to come by. Note 4 However, Roland knew someone who worked at the NSA (the "National Security Agency", a US spy agency), which makes use of such esoteric mathematics in fields such as the generation and cracking of secret codes.

So Roland called his friend, and I found myself listening to Roland's end of the conversation. Roland started off with some small talk: "How's it going, Joe? How's Marge? Good to hear it." Note 5 Then he got to the point: "Say, Joe, I need a prime polynomial in X to the 18th." There was a long pause. "What's going on?" we asked Roland. He told us that Joe had immediately replied, "I've got to go see if it's classified." We noticed that Roland's friend at the NSA had not answered that he didn't know such a polynomial. Apparently, this sort of information was a trivial matter to him, something he could probably reel off the top of his head. His only issue was whether or not it was a secret. After a pause, he came back on the phone, and Roland later recounted to us what he said. In a hoarse whisper, he said "X to the 18th plus X to the 7th plus 1". And that was, indeed, a prime polynomial. We never did find out whether it was unclassified, or whether he had been unable to quickly determine if it was classified or not, but he had told us anyway.
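Here is a minimal Python sketch of how such a polynomial produces a PN-sequence. It uses a "Galois" shift register, which repeatedly multiplies its 18-bit state by X and reduces modulo $X^{18} + X^7 + 1$, and then fills a simulated memory with the same 18-bit value in both halves of every 36-bit word, as the program described next reportedly did. The function names are hypothetical and the memory is a Python list; this is not a reconstruction of the lab's actual PDP-10 program. Such a register never produces zero, so it yields $2^{18} - 1$ distinct values before repeating.

```python
from typing import Iterator

DEGREE = 18
POLY = (1 << 18) | (1 << 7) | 1     # X^18 + X^7 + 1; bit i is set for each X^i term


def pn_sequence(count: int, seed: int = 1) -> Iterator[int]:
    """Yield `count` successive states of a Galois LFSR: seed * X^k mod POLY over GF(2)."""
    state = seed
    for _ in range(count):
        yield state
        state <<= 1                 # multiply the state polynomial by X
        if state >> DEGREE:         # an X^18 term appeared, so
            state ^= POLY           # reduce modulo POLY (XOR is subtraction in GF(2))


def fill_memory(words: int) -> list[int]:
    """Put the same 18-bit PN value into both halves of each simulated 36-bit word."""
    return [(value << DEGREE) | value for value in pn_sequence(words)]


def check_memory(memory: list[int]) -> list[int]:
    """Regenerate the sequence and return the addresses whose contents differ."""
    expected = pn_sequence(len(memory))
    return [addr for addr, (got, value) in enumerate(zip(memory, expected))
            if got != (value << DEGREE) | value]


if __name__ == "__main__":
    memory = fill_memory(2 ** 18)       # one word per address of the Moby Memory
    print(len(set(memory)))             # 262143: only the very last word repeats the first
    memory[1000] ^= 1 << 20             # simulate one bit read back wrongly
    print(check_memory(memory))         # [1000]
```

Because the checker regenerates the expected values from the same polynomial, it needs no stored copy of the test data, and any mismatch pinpoints the failing address.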
And so, a program was written based on the polynomial to fill the Fabri-Tek memory with (nearly) two to the 18th different 18-bit numbers (I think they put the same 18-bit number in each half of the 36-bit word). Note 6 And lo and behold, the memory failed this test. The numbers read back didn't always match what had been written.

So the Fabri-Tek engineers set to work finding the source of the problem. By the end of the day, they were still unable to fix it. They canceled their flight reservations home in order to stay overnight and continue working on the problem. As the days went by, they sent home for their pajamas and other supplies, and settled in for a long stay. The problem did not yield easily to their investigation.

One day, while they were away at lunch, some wise guy in the lab found a bottle of small ferrite cores and scattered them on the floor at the base of the memory. When the Fabri-Tek engineers returned, one of them saw the cores and was momentarily terrified. Had the array actually been damaged, breaking wires and dropping cores to the floor underneath? Fortunately, he didn't die of a heart attack before realizing that the cores on the floor were the wrong size, and could not possibly have come from his memory array.

The engineers did eventually figure out the trouble, and fortunately it turned out not to be a problem with the memory itself, but rather with the interface circuitry between the memory and the computer. Greenblatt wrote, "Finally, we called Fabritek and gave them an ultimatum. A Fabritek vice-president, an engineer who had been kicked upstairs some months previously, came out to talk about it, but we were going to send it back. However, it was winter time and his flight back to Minnesota was cancelled due to a snowstorm, so he figured he might as well take a crack at the problem. He fixed it. The problem, as I remember, involved a tiny bug in the exact timing of the X and Y signals, which was very complicated.
As a result, just enough noise was coupled into the sense amplifier to sometimes cause lossage." By "lossage", Greenblatt means "errors" (see the Jargon File). It's interesting to see that Greenblatt is still using AI Lab jargon about 45 years later.

I recall at least one program written in LISP by Pete Samson that filled the entire Moby Memory. Note 7 The program computed an optimal route to cover the entire right-of-way of the New York City subway system in a minimal amount of time (about 25 hours, it turns out). It was used to update the route in real time as two riders made the trip; I describe my minor part in this project in the entry "Amateur subway riding". I think most of the Moby Memory was also used to encode chess board positions in a giant "hash table", so that Greenblatt's chess program didn't have to re-evaluate a position that it had seen before.

Finally, I alluded earlier to the possibility that the four extra bits in each word of the Moby Memory, which may have been included for redundancy, could have influenced the future path of computer science. Here's what happened. Given that four extra bits were available in each memory word, the hackers in the lab considered ways to access and use them. One of the bits could serve as a "parity" bit, to detect memory errors. They also considered allowing each individual memory word to be "write locked", that is, made read-only, if a second bit was set. A third bit would have caused any program access to that word to generate a software interrupt. Both of those features could have been used for software debugging. But it was the proposed use of the fourth extra bit that was most interesting: it was proposed to use it for a necessary operation in the LISP programming language called "garbage collection". LISP programs leave behind structures in memory that are no longer in use, and this memory needs to be gathered up and recycled (so to speak).
Early LISP programs needed to be stopped for garbage collection when the memory filled up. Later, methods were developed to garbage collect in the background. Garbage collection involves tracing through all the "list structures" the program is using, and marking them as "in use". When that process is complete, all the memory words that have not been so marked can be gathered into a "free storage list" for re-use. Garbage collection becomes simpler, and much faster, when an extra bit attached to each word is available to indicate whether or not the word is in use, so the possibility of assigning one of the "extra" bits in the Moby Memory to that purpose was discussed.

I thought that these proposals had actually been carried out, but Greenblatt recalls differently, and I'm sure he knew more about it than I did. He wrote, "We were certainly aware of those extra bits and tried to figure out a way to use them, but it never made sense, partly because of the need to keep using the original DEC type 163 16K-by-37-bit memory which didn't have those bits. Also, after we had timesharing, the extra bits would have had to be swapped to the disk, which would have been painful. I believe the Systems Concept disk control did in principle have a way to read those bits, but it was painful and I am pretty sure it was never even tried out. Allocating space on the disk for any extra bits would have been extremely painful too."

But at some point in the late sixties or early seventies, it occurred to Greenblatt and Tom Knight that it could be useful to design a computer in which each piece of data in the memory had extra information attached. This turned out to be particularly useful for programs written in the LISP language, which was widely used for research in Artificial Intelligence. The extra bits could be used to speed up garbage collection, but also for other purposes useful in running LISP programs (too technical to describe here).
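The mark-and-sweep scheme described above can be sketched in Python, with the per-word "in use" bit modeled as a `mark` flag stored alongside each cell. This is a simplified model under stated assumptions: memory is a list of cons cells, pointers are integer cell indices, `None` stands for an atom or the empty list, and the free storage list is kept as a Python list of indices rather than being threaded through the cells. `Cell` and `Heap` are hypothetical names, not those of any actual LISP system.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Cell:
    """One memory word holding a cons cell, plus the extra "in use" bit."""
    car: Optional[int] = None   # index of another cell, or None for an atom / NIL
    cdr: Optional[int] = None
    mark: bool = False          # the per-word mark bit used by the collector


class Heap:
    def __init__(self, size: int):
        self.cells = [Cell() for _ in range(size)]
        self.free = list(range(size))       # simplified "free storage list" of cell indices

    def cons(self, car: Optional[int], cdr: Optional[int]) -> int:
        """Allocate a cell from free storage."""
        if not self.free:
            raise MemoryError("free storage exhausted; call collect() first")
        index = self.free.pop()
        self.cells[index] = Cell(car, cdr)
        return index

    def collect(self, roots: Iterable[Optional[int]]) -> int:
        """Mark cells reachable from `roots`, sweep the rest onto the free list, return its size."""
        # Mark phase: an explicit stack avoids deep recursion on long lists.
        stack = [root for root in roots if root is not None]
        while stack:
            cell = self.cells[stack.pop()]
            if cell.mark:                   # already visited: handles shared and circular structure
                continue
            cell.mark = True
            stack.extend(p for p in (cell.car, cell.cdr) if p is not None)
        # Sweep phase: every unmarked cell is garbage; clear the marks on survivors.
        self.free = []
        for index, cell in enumerate(self.cells):
            if cell.mark:
                cell.mark = False
            else:
                self.free.append(index)
        return len(self.free)
```

For example, in an eight-cell heap holding a two-cell list plus one unreachable cell, `heap.collect([b])` (where `b` is the head of the list) keeps the list, reclaims the unreachable cell, and returns 6. Because the mark bit travels with each word, the collector needs no separate table to record which words it has visited.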

The original "Moby Memory" described in this entry, the size of a couple of refrigerators, held 262,144 words of five bytes each (counting the extra four bits, each word had 40 bits), for a total of 1,310,720 bytes. So the tiny 32-gigabyte microSD card holds more than 26,000 times as much as the Moby Memory. It is equally non-volatile; that is, like core memory, it retains its contents when the power has been turned off. In the core memory, each bit was stored in a single, discrete magnetic toroid. That was 10,485,760 separate little cores (since each byte comprises eight bits). In the microSD card, the bits are stored in transistor-based flash memory cells integrated onto one or more chips, 274,877,906,944 bits in all. (Many such cards store two or more bits per cell, so there are fewer cells than bits, but still many billions of them.) That's nearly 275 billion bits in that tiny package. Now that's moby.
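The figures in this comparison can be reproduced in a few lines of Python. The only assumption is that the 32-gigabyte card holds $32 \times 2^{30}$ bytes, matching the 34,359,738,368-byte figure given for it.

```python
WORDS = 2 ** 18                  # "256K" words
BITS_PER_WORD = 36 + 4           # 36 data bits plus Fabri-Tek's 4 extra bits = 5 bytes

moby_bytes = WORDS * BITS_PER_WORD // 8
moby_cores = moby_bytes * 8      # one ferrite core per bit

card_bytes = 32 * 2 ** 30        # a 32-gigabyte microSD card
card_bits = card_bytes * 8

print(f"Moby Memory: {moby_bytes:,} bytes in {moby_cores:,} cores")  # 1,310,720 bytes in 10,485,760 cores
print(f"microSD card: {card_bytes:,} bytes = {card_bits:,} bits")    # 34,359,738,368 bytes = 274,877,906,944 bits
print(f"ratio: {card_bytes / moby_bytes:,.1f}")                      # 26,214.4
```

Since both sizes are powers of two times small factors, the ratio simplifies exactly to $2^{17} / 5 = 26{,}214.4$.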

Note 1: There is an extremely faded slip from a fortune cookie taped to the panel that reads, "A good memory does not equal pale ink". I recall receiving a fortune at a restaurant in Boston's Chinatown and pasting it on the memory. That fortune read "You will hear pleasant words which will remain in your memory." Someone apparently removed it from the front of the control panel at some point and taped it to the interior of the control panel. I know this from the Computer History Museum's page describing the memory, which the museum now possesses. Obviously, the fortunes are referring to human memories, not computer memories.

The denizens of the Artificial Intelligence lab were great fans of Chinese food. Bill Gosper learned how to write culinary Chinese so that he could, in writing, order dishes that were not available on the English menus we were given. Chinese diners might speak either Mandarin or Cantonese, so it is not uncommon for Chinese restaurants to provide their patrons with small pads of paper on which to write their orders (the written characters are the same, regardless of the dialect spoken).

PDP-10 programmers, and indeed the programmers of DEC machines in general, divided binary numbers into groups of three bits, and specified them in the octal (base 8) number system. Word sizes were generally multiples of three: 18 bits for the PDP-1 and PDP-4 minicomputers, 12 bits for the inexpensive PDP-8, and 36 bits for the PDP-6 and PDP-10 mainframes. The division of the bits into groups of three can be seen in the arrangement of the lights on the panel. The widespread use of hexadecimal numbers (base 16, dividing the bits into groups of four) came along with the byte-oriented memories of the IBM System/360, and continues to this day.

Photo credit: Marcin Wichary, under a Creative Commons license. See his Flickr page.
Although this photo was taken in 2008, this is the control panel from the memory used in the Artificial Intelligence Lab, now apparently in the Computer History Museum in Mountain View, California, as noted above. The unit can be identified by the property tags on the panel, one from "MASS. INST. OF TECH." and another from "MASSACHUSETTS INSTITUTE OF TECHNOLOGY DIVISION OF SPONSORED RESEARCH". To my knowledge, the memory used by the AI group was the only memory of this type ever used at MIT.

Note 2: Although "K" generally refers to 1,000 (based on the metric prefix "kilo"), when talking about computer memories it means 1,024. This is because digital computer memories are addressed in the binary system, and hence in numbers which are powers of two, and $2^{10} = 1{,}024$. Thus "16K" equals 16,384 and "64K" equals 65,536. And the "Moby Memory" discussed in this entry, containing "256K" words, actually had 262,144 of them.

Note 3: When magnetic core memories were first built, multiple wires were passed through each core. Eventually, a simpler design (called a "3-D" array) was arrived at in which only three wires were needed. Although the X and the Y wires could be threaded through the cores by machine, the third wire (called the "sense wire") had to be threaded by hand. This made construction of the memories extremely expensive. Very large memories were ultimately made possible by the development of an architecture that eliminated the sense wire. This type of core array was called a "2½-D array". That's the technology that was used by our Fabri-Tek memory.

Note 4: An example can be found on Google Books.

Note 5: These names are made up; I don't remember the actual names.
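Note 2's arithmetic, and the octal convention from Note 1, can be checked with a few lines of Python. The helper names `octal_word` and `hex_word` are hypothetical. A 36-bit word comes out as twelve octal digits, one per group of three bits (matching the grouping of the panel lights), or as nine hexadecimal digits; each 18-bit half of the word is exactly six octal digits.

```python
K = 2 ** 10   # 1,024: "K" as used for binary-addressed memories

for size in (16, 64, 256):
    print(f"{size}K = {size * K:,} words")   # 16,384 / 65,536 / 262,144


def octal_word(word: int, bits: int = 36) -> str:
    """Show a word as octal digits, each digit standing for one group of three bits."""
    if not 0 <= word < 1 << bits:
        raise ValueError(f"value does not fit in {bits} bits")
    return format(word, f"0{bits // 3}o")


def hex_word(word: int, bits: int = 36) -> str:
    """Show the same word in hexadecimal, one digit per group of four bits."""
    if not 0 <= word < 1 << bits:
        raise ValueError(f"value does not fit in {bits} bits")
    return format(word, f"0{(bits + 3) // 4}x")


print(octal_word((0o123456 << 18) | 0o654321))   # 123456654321: left half, then right half
print(hex_word(2 ** 36 - 1))                     # fffffffff
```

Octal lines up neatly with 18- and 36-bit words, while hexadecimal only splits evenly at 4-bit boundaries, which suits 8-bit bytes.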
Note 6: It takes quite a bit of computation to find a prime polynomial in X to the 18th, but it's very easy to verify one. You program up the PN-sequence based on the polynomial, and start it off with the value 000000000000000001. You then run the sequence. If it goes through $2^{18}-1$ values before returning to its starting point, then the polynomial is prime (more precisely, it is "primitive", which is the property a maximal-length PN-sequence requires).

Note 7: Verified by Pete Samson (see his comment, headed "Comment by Pete Samson").
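The verification procedure in Note 6 can be sketched in Python as follows. The function names are hypothetical; the register is the "multiply by X modulo the polynomial" (Galois) form, started from the value 1 as the note describes. For a polynomial of degree $n$, returning to the starting value after exactly $2^n - 1$ steps shows that the polynomial is primitive.

```python
def lfsr_period(poly: int, degree: int, seed: int = 1) -> int:
    """Count steps of the Galois LFSR for `poly` until its state returns to `seed`.

    `poly` is a bit mask with bit i set for each X^i term, including X^degree and 1.
    Because the constant term is 1, X is invertible modulo poly, so the state
    always comes back to the seed and the loop terminates.
    """
    state, steps = seed, 0
    while True:
        state <<= 1                  # multiply by X
        if state >> degree:
            state ^= poly            # reduce modulo poly over GF(2)
        steps += 1
        if state == seed:
            return steps


def is_primitive(poly: int, degree: int) -> bool:
    """Note 6's test: the maximal period 2**degree - 1 means the polynomial is primitive."""
    return lfsr_period(poly, degree) == 2 ** degree - 1
```

For instance, `is_primitive((1 << 18) | (1 << 7) | 1, 18)` returns `True` after 262,143 steps, and `is_primitive(0b10011, 4)` ($X^4 + X + 1$) returns `True` with period 15. By contrast, $X^4 + X^3 + X^2 + X + 1$ (`0b11111`) has period 5, so `is_primitive(0b11111, 4)` returns `False`.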


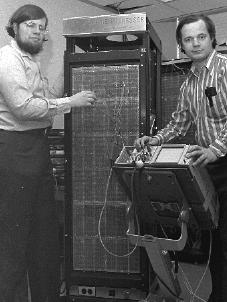

I've always suspected that Greenblatt and Knight's insight might have been influenced by having thought about using the extra bits associated with each word in the Moby Memory. Greenblatt and Knight started a project to design a machine specifically to run LISP programs, using what came to be called a "tagged architecture" (because each memory word is "tagged" with additional information not part of the actual datum it holds). The project ultimately became the "MIT Lisp Machine Project", and led to the machine shown to the left, with its developers Knight (left) and Greenblatt (right). The picture was taken at MIT in 1978. The project led to the creation of multiple competing "LISP machines". Two companies were spun out of the AI Lab: Greenblatt's LMI ("Lisp Machines Incorporated") and lab manager Russell Noftsker's Symbolics. The sometimes contentious history of these companies can be found documented elsewhere on the web.


As for the name "Moby Memory": there was once a company (now defunct) called Moby Memory UK Ltd. I don't know the origin of their name, but the use of the word "moby" in this context is not very common outside of hacker jargon. Online, they distributed memory cards, mobile phone memory cards, memory sticks, and many other products and accessories. One of the memory products they carried was a "microSD" memory card, like the one shown to the right perched on a fingertip. At the time this blog entry was originally written, these came in capacities of up to 32 gigabytes (that is, 34,359,738,368 bytes). They now come in even larger sizes. They are 15 mm long by 11 mm wide by 1 mm thick (0.59 × 0.43 × 0.04 inches).
